# Kafka Cluster Installation on Linux

This tutorial explains, step by step, how to install an Apache Kafka cluster on Linux machines.

Once the Zookeeper cluster is installed, you can install the Kafka cluster.

Our environment has:

- 3 Linux machines (CentOS 8)

- a Zookeeper cluster installed on those machines

Please take a look at the tutorial named Zookeeper cluster installation to see more details about the environment we use for this installation.

Here are the steps for installing the Kafka servers in a cluster:

- Create the Kafka server data directory

mkdir -p /kafka/kafka_2.13-2.8.0/data/kafka

- Add file limit settings to allow up to 100,000 open file descriptors (as the root user)

echo "* hard nofile 100000
* soft nofile 100000" | sudo tee --append /etc/security/limits.conf

reboot

- Configure Kafka server properties

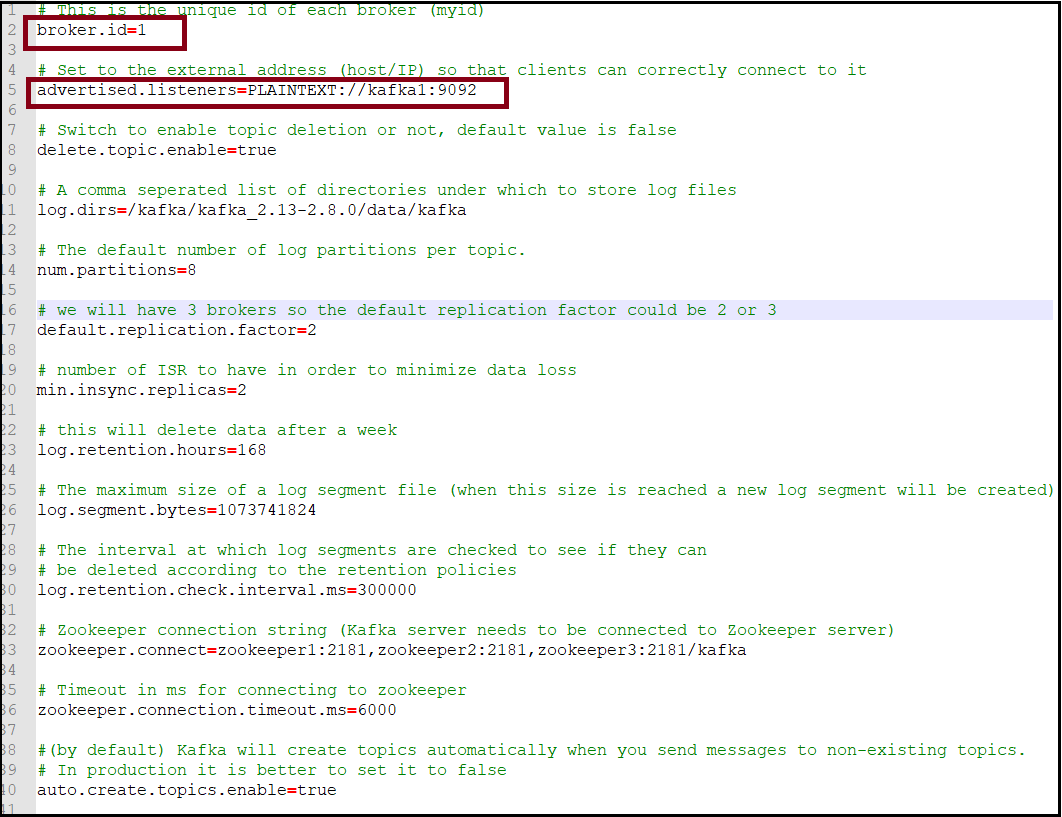

Take a look at the image below:

Put these parameters into the server.properties configuration file (in my case it is /kafka/kafka_2.13-2.8.0/config/ folder).

This file must be adapted for each Kafka server (the parameters which must modified for each server is marked in the image above).
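Because only a few values change from one broker to the next, the per-server edits can be scripted. The sketch below is one possible way to do it, not the method used in this tutorial. It assumes the brokers are named kafka1, kafka2 and kafka3 with broker ids 1 to 3 (only kafka1 and broker.id=1 appear in this tutorial; the other names are assumptions), and that broker.id and advertised.listeners are the values that differ. The function names render_broker_overrides and apply_broker_overrides are made up for illustration.

```shell
#!/bin/sh
# Sketch: produce the per-broker lines of server.properties.
# Assumption: broker N runs on host kafkaN and uses broker.id=N.

# Print the two per-broker settings for broker number $1.
render_broker_overrides() {
    id="$1"
    printf 'broker.id=%s\n' "$id"
    printf 'advertised.listeners=PLAINTEXT://kafka%s:9092\n' "$id"
}

# Rewrite the two per-broker keys in an existing properties file.
# $1 = broker number, $2 = path to server.properties
# All other lines are left untouched.
apply_broker_overrides() {
    id="$1"
    file="$2"
    sed -e "s|^broker\.id=.*|broker.id=${id}|" \
        -e "s|^advertised\.listeners=.*|advertised.listeners=PLAINTEXT://kafka${id}:9092|" \
        "$file" > "${file}.tmp" && mv "${file}.tmp" "$file"
}

# Example: show what the second broker would get.
render_broker_overrides 2
```

On the second machine, running `apply_broker_overrides 2 /kafka/kafka_2.13-2.8.0/config/server.properties` would then update a copied file in place. The script writes to a temporary file and moves it over the original instead of relying on `sed -i`, whose behavior differs between sed implementations.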

To make the text easy to copy, here is the content of the image:

# This is the unique id of each broker (myid)

broker.id=1

# Set to the external address (host/IP) so that clients can correctly connect to it

advertised.listeners=PLAINTEXT://kafka1:9092

# Switch to enable topic deletion or not, default value is true (since Kafka 1.0)

delete.topic.enable=true

# A comma separated list of directories under which to store log files

log.dirs=/kafka/kafka_2.13-2.8.0/data/kafka

# The default number of log partitions per topic.

num.partitions=8

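# We will have 3 brokers, so the default replication factor could be 2 or 3

default.replication.factor=2

# Minimum number of in-sync replicas (ISR) that must acknowledge a write sent with acks=all, in order to minimize data loss

# Note: with a replication factor of 2 and min.insync.replicas=2, acks=all producers cannot write while one replica is down

min.insync.replicas=2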

# this will delete data after a week

log.retention.hours=168

# The maximum size of a log segment file (when this size is reached a new log segment will be created)

log.segment.bytes=1073741824

# The interval at which log segments are checked to see if they can

# be deleted according to the retention policies

log.retention.check.interval.ms=300000

# Zookeeper connection string (Kafka server needs to be connected to Zookeeper server)

zookeeper.connect=zookeeper1:2181,zookeeper2:2181,zookeeper3:2181/kafka

# Timeout in ms for connecting to zookeeper

zookeeper.connection.timeout.ms=6000

# By default, Kafka creates topics automatically when you send messages to non-existing topics.

# In production it is better to set it to false

auto.create.topics.enable=true

- Start Kafka Server

Before you start the Kafka server, you must start the Zookeeper server. For this tutorial, we assume Zookeeper is already running. For more details about this subject, take a look at the tutorial named Start/Stop Apache Kafka and Zookeeper.
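Before starting the broker, it can also help to confirm that the file descriptor limit configured earlier is in effect for the user that will run Kafka. The following is a minimal sketch of such a check; the function name limit_is_sufficient and the variable REQUIRED_NOFILE are made up for illustration, and 100000 is the value written to limits.conf in this tutorial.

```shell
#!/bin/sh
# Sketch: verify the open-file limit before starting the broker.
REQUIRED_NOFILE=100000   # value written to /etc/security/limits.conf earlier

# Succeeds when the limit in $1 is "unlimited" or at least the number in $2.
limit_is_sufficient() {
    case "$1" in
        unlimited) return 0 ;;
        *) [ "$1" -ge "$2" ] ;;
    esac
}

# Read the soft and hard limits of the current shell session.
soft="$(ulimit -Sn)"
hard="$(ulimit -Hn)"
echo "soft nofile limit: $soft"
echo "hard nofile limit: $hard"

if limit_is_sufficient "$soft" "$REQUIRED_NOFILE"; then
    echo "OK: soft limit is at least $REQUIRED_NOFILE"
else
    echo "WARNING: soft limit is below $REQUIRED_NOFILE; log in again or reboot"
fi
```

Run it as the same user that starts Kafka, since the limits in limits.conf are applied per login session.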

cd /kafka/kafka_2.13-2.8.0

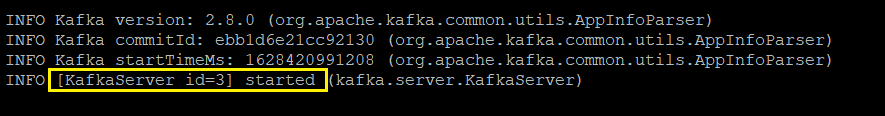

kafka-server-start.sh ./config/server.propertiesWhen Kafka server is running you will see something like this:
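Beyond the startup log, a quick way to confirm that a broker has joined the cluster is to ask Zookeeper which broker ids are registered and to query the broker itself. The sketch below uses the zookeeper-shell.sh and kafka-broker-api-versions.sh scripts that ship in the Kafka bin directory. The connection strings are taken from the configuration in this tutorial; the function names check_brokers and check_api and the KAFKA_HOME variable are assumptions made for illustration.

```shell
#!/bin/sh
# Sketch: check that a broker registered itself and answers client requests.
KAFKA_HOME="${KAFKA_HOME:-/kafka/kafka_2.13-2.8.0}"
ZK_CONNECT="zookeeper1:2181/kafka"   # first entry plus chroot from zookeeper.connect
BOOTSTRAP="kafka1:9092"              # from advertised.listeners

# List the broker ids currently registered in Zookeeper.
check_brokers() {
    "$KAFKA_HOME/bin/zookeeper-shell.sh" "$ZK_CONNECT" ls /brokers/ids
}

# Ask the broker which API versions it supports; this fails if it is unreachable.
check_api() {
    "$KAFKA_HOME/bin/kafka-broker-api-versions.sh" --bootstrap-server "$BOOTSTRAP"
}

# Only run the checks when a Kafka installation is present.
if [ -x "$KAFKA_HOME/bin/zookeeper-shell.sh" ]; then
    check_brokers
    check_api
else
    echo "Kafka not found under $KAFKA_HOME; skipping checks"
fi
```

With all three servers started, the first check should list three broker ids, matching the broker.id values set in each server.properties file.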