# JAVA: Kafka Streams - Explanation & Example

This tutorial explains what Kafka Streams is and how to create a Kafka Streams application. It also walks through an example.

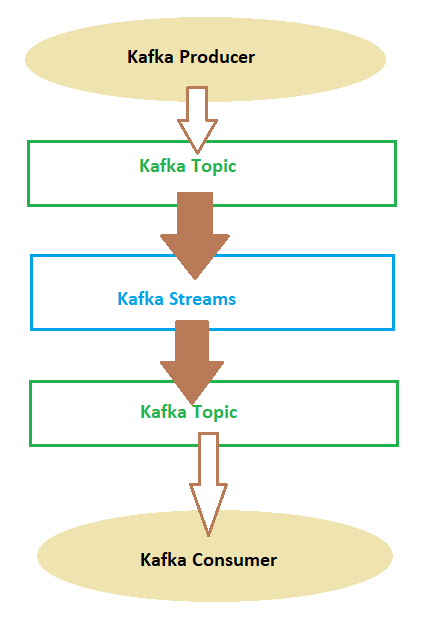

Kafka Streams is a Java library for building streaming applications, specifically applications that transform input Kafka topics into output Kafka topics (or into calls to external services, updates to databases, etc.).

You can see this in the following image:

Kafka Streams applications are written in Java or Scala. They read continuously from one or more topics and process the messages they receive. These applications work with abstractions over topics named streams, and the streams, together with the operations applied to them, form what is called the "Kafka topology". As you can see in the picture, a Kafka Streams application reads data from one or more topics, filters, aggregates, modifies, or enriches the messages, and then generally writes the result to another topic.

You can see an example below.

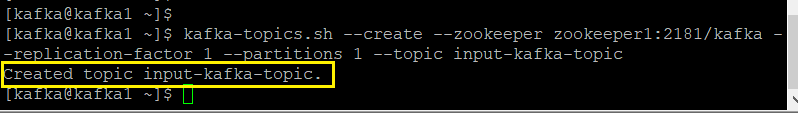

In order to test the Kafka Streams, you need to create an input topic where your application is listening for new messages.

You can use the following command:

kafka-topics.sh --create --zookeeper zookeeper1:2181/kafka --replication-factor 1 --partitions 1 --topic input-kafka-topic

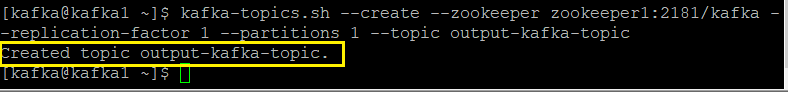

Now you can create the topic to which the Kafka Streams application writes the data.

kafka-topics.sh --create --zookeeper zookeeper1:2181/kafka --replication-factor 1 --partitions 1 --topic output-kafka-topic

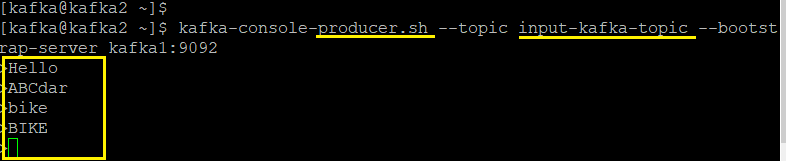

Using the following command, you can put data into the first topic (from the console, for testing purposes):

kafka-console-producer.sh --topic input-kafka-topic --bootstrap-server kafka1:9092

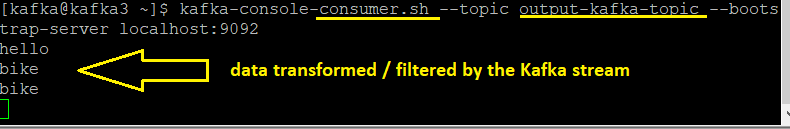

The Kafka Streams application does the following:

- filters the data: only the messages that do not start with "ABC" are kept

- converts all the characters to lowercase

So, the output topic will receive the following message:

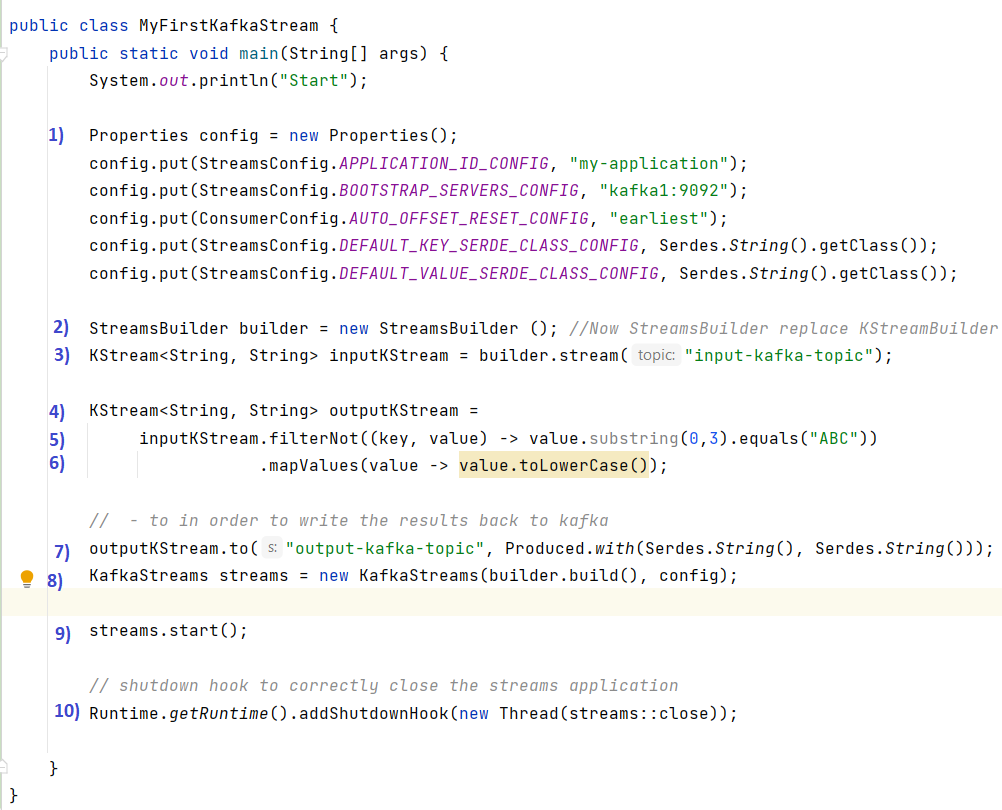
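To make the two rules concrete, here is a minimal plain-Java sketch that needs no Kafka dependency. The class name `MessageRules` and the sample messages are hypothetical, and the sketch assumes the "ABC" check is case-sensitive, which the article does not state.

```java
import java.util.List;
import java.util.Locale;
import java.util.stream.Collectors;

class MessageRules {

    // Rule 1: keep only messages that do NOT start with "ABC"
    // (assumption: the comparison is case-sensitive).
    static boolean isAccepted(String message) {
        return !message.startsWith("ABC");
    }

    // Rule 2: convert the whole message to lowercase.
    static String normalize(String message) {
        return message.toLowerCase(Locale.ROOT);
    }

    // Applies both rules, in order, to a batch of messages.
    static List<String> process(List<String> messages) {
        return messages.stream()
                .filter(MessageRules::isAccepted)
                .map(MessageRules::normalize)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        // Hypothetical sample input, not taken from the screenshots.
        List<String> input = List.of("ABC first", "Hello World", "abc second");
        System.out.println(process(input)); // prints [hello world, abc second]
    }
}
```

Filtering happens before lowercasing, so a message that starts with lowercase "abc" passes the filter under this assumption.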

And here is the Java code that does that:

And here is the explanation:

- use the configuration to tell your application where the Kafka cluster is, which serializers/deserializers to use by default, to specify security settings and so on.

- create a Stream Builder

- create a KStream from a Kafka topic

- create a second KStream from the first one (the messages in a stream are immutable, so every operation returns a new stream instead of modifying the existing one)

- add a filter to the first stream

- add a transformation to the first stream (after the filtering)

- put the result to another Kafka topic

- create the KafkaStreams application instance

- start the streams

- add a shutdown hook to close the streams application correctly

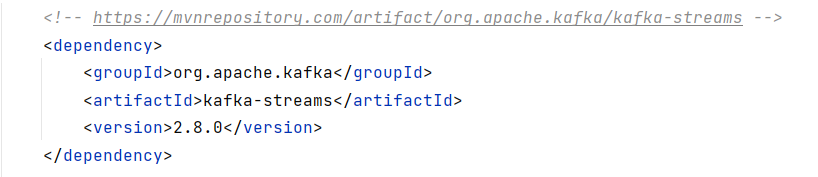
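The steps listed above could be sketched roughly as follows. This is an illustration and not necessarily the author's exact code: the class name `LowercaseFilterApp` and the application id are hypothetical, String serdes are assumed for both keys and values, and the broker address and topic names are the ones used in the commands earlier in this article.

```java
import java.util.Properties;

import org.apache.kafka.common.serialization.Serdes;
import org.apache.kafka.streams.KafkaStreams;
import org.apache.kafka.streams.StreamsBuilder;
import org.apache.kafka.streams.StreamsConfig;
import org.apache.kafka.streams.Topology;
import org.apache.kafka.streams.kstream.KStream;

class LowercaseFilterApp {

    // Builds the topology: input topic -> filter -> lowercase -> output topic.
    static Topology buildTopology() {
        StreamsBuilder builder = new StreamsBuilder();

        // First stream: reads from the input topic.
        KStream<String, String> source = builder.stream("input-kafka-topic");

        // Second stream: each operation returns a new KStream.
        KStream<String, String> result = source
                // keep only non-null values that do not start with "ABC"
                .filter((key, value) -> value != null && !value.startsWith("ABC"))
                // convert the value to lowercase
                .mapValues(value -> value.toLowerCase());

        // Write the result to the output topic.
        result.to("output-kafka-topic");
        return builder.build();
    }

    public static void main(String[] args) {
        Properties config = new Properties();
        // Hypothetical application id; any id unique in the cluster works.
        config.put(StreamsConfig.APPLICATION_ID_CONFIG, "lowercase-filter-app");
        config.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG, "kafka1:9092");
        // Assumption: keys and values are plain strings.
        config.put(StreamsConfig.DEFAULT_KEY_SERDE_CLASS_CONFIG, Serdes.String().getClass());
        config.put(StreamsConfig.DEFAULT_VALUE_SERDE_CLASS_CONFIG, Serdes.String().getClass());

        KafkaStreams streams = new KafkaStreams(buildTopology(), config);

        // Close the application cleanly when the JVM shuts down.
        Runtime.getRuntime().addShutdownHook(new Thread(streams::close));

        streams.start();
    }
}
```

Keeping the topology in its own method is a design choice of this sketch: it lets the processing logic be inspected or tested without connecting to a broker.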

For this example you have to add the following Maven dependency: